[video=youtube;TQlu39SIH6M]http://www.youtube.com/watch?v=TQlu39SIH6M&feature=player_detailpage[/video]

Recently, Apple released its new iPad. It is an impressive device and will no doubt be a roaring success; however, Apple also made the bold claim that the A5X is four times faster than the Tegra 3, which had NVIDIA scoffing. Now that the device is out, the proof is in the pudding: Laptop Mag pitted the new iPad against a Transformer Prime to test Apple's claim. As it turns out, Apple is sort of right, from a certain point of view, but only if you read the claim in the narrowest way possible.

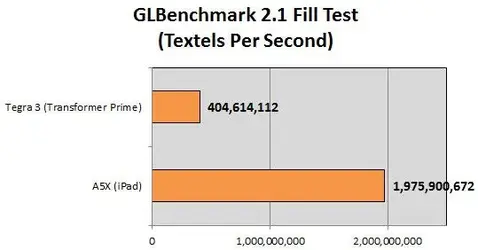

You see, it turns out that the graphics processor in the new A5X is faster than the one in the Tegra 3 in an OpenGL 3D benchmark. In fact, the A5X renders over four times as many texture pixels as the NVIDIA Tegra 3; however, this only translates into twice as many frames per second overall. So, ultimately, one small part of the A5X's graphics pipeline is four times faster, but the actual real-world result is only twice as fast. Of course, we have no problem giving credit where credit is due, and being twice as fast is a remarkable achievement from Apple. Still, this is only in one particular graphics benchmark.
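
To see how a roughly 4x gain in one stage can shrink to about 2x overall, here is a rough back-of-the-envelope sketch using an Amdahl's-law-style estimate. It is only an illustration, not how Laptop Mag measured anything: the function name `overall_speedup` and the time shares below are made up, and the model assumes frame time splits cleanly into a texture-fill part that speeds up and a remainder that does not.

```python
def overall_speedup(fraction_accelerated: float, stage_speedup: float) -> float:
    """Amdahl's-law-style estimate of whole-frame speedup.

    fraction_accelerated: share of the original frame time spent in the
        stage that got faster (hypothetical value, between 0 and 1).
    stage_speedup: how many times faster that one stage became.
    """
    if not 0.0 <= fraction_accelerated <= 1.0:
        raise ValueError("fraction_accelerated must be between 0 and 1")
    if stage_speedup <= 0:
        raise ValueError("stage_speedup must be positive")
    # Time left over is the untouched part plus the shortened accelerated part.
    remaining = 1.0 - fraction_accelerated
    return 1.0 / (remaining + fraction_accelerated / stage_speedup)


if __name__ == "__main__":
    # Hypothetical shares of frame time spent on texture fill.
    for share in (0.25, 0.5, 2 / 3, 0.9):
        print(f"fill share {share:.2f} -> {overall_speedup(share, 4.0):.2f}x overall")
```

Under this simplified model, a 4x faster fill stage yields 2x the frame rate only when fill work takes up about two thirds of the original frame time; any smaller share gives less than 2x.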

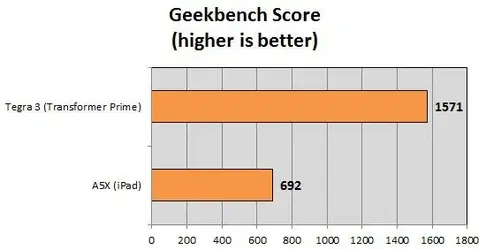

Interestingly, in other benchmarks, the raw computational power of the Tegra 3 was vastly superior to that of the iPad. The quad-core chip in the T-Prime outperformed the iPad in integer, floating point, and memory performance. Finally, things were taken a step further to test real-world performance, because everyone knows that benchmarking programs don't show the whole picture. The two tabs were pitted against each other while playing Riptide GP and Shadowgun. In this test, the closest thing to real-world use, the two tabs were subjectively in a dead heat. Apparently, the Retina display on the iPad is so gorgeous that it made the games look fantastic; however, some custom graphical flourishes, like flowing water and billowing flags, are optimized for the Tegra 3 and make it stand out as well.

Ultimately, real-world results tend to deflate the marketing hype surrounding the release of a new device, and Apple's bold claim proves to be a bit overblown in this instance. Of course, this goes both ways, so who knows? When the next Tegra device comes along, NVIDIA may be singing the same song. Luckily, we consumers are the winners when these folks duke it out over who is the fastest and best!

Source: PhanDroid